
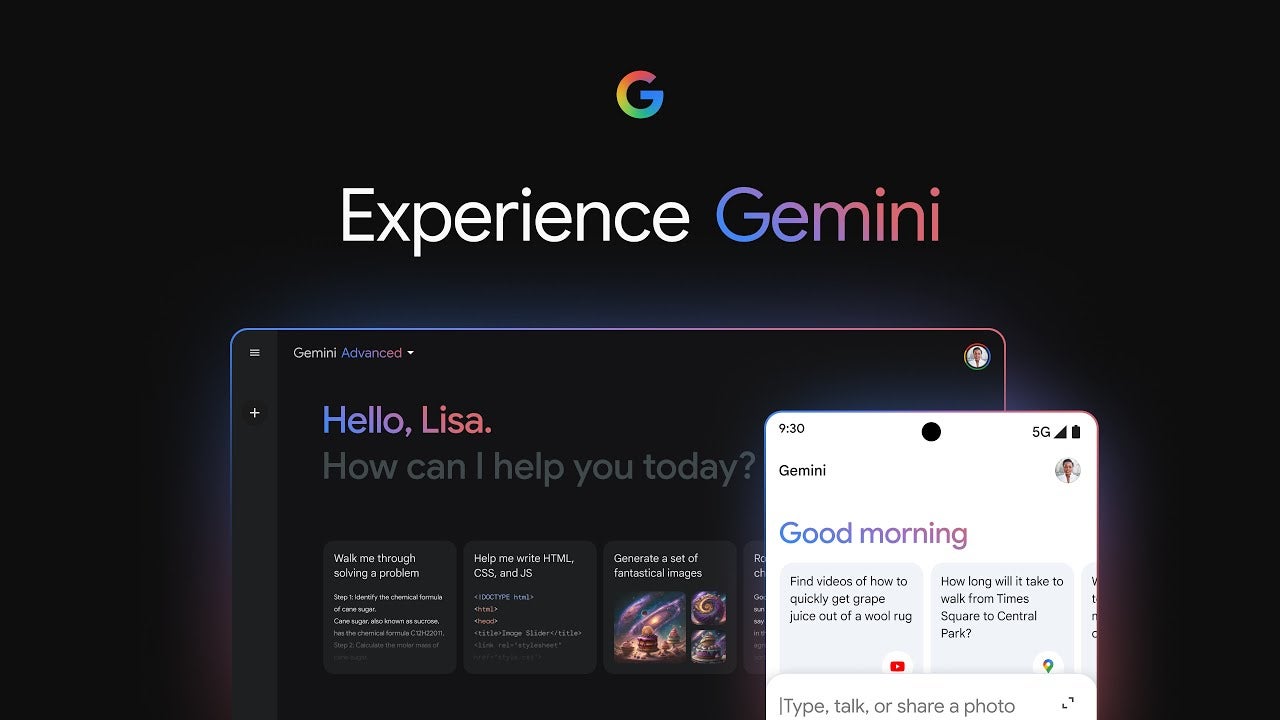

Gemini can now listen to and understand audio files

As you may know, the more data you feed an AI model, the better it tends to get (and the freakier, if you’re one of the more skeptical people). At first, AI models were trained mostly on text, which is especially important for chatbots. Then they learned to process image data, and they can now reconstruct an image or create a whole new one from your prompt. Gemini (formerly called Bard, for those of you who don’t know) has already been able to process images, and now it’s expanding to audio. The version that does this, Gemini 1.5 Pro, is currently in testing. This opens up a world of possibilities, like summaries of long keynotes, conversations, earnings calls, lectures, and similar recordings. You’ll be able to upload the audio file to Gemini.

Tools that summarize long calls already exist, but they transcribe the call first and then summarize the transcript. Gemini, by contrast, will listen to the call itself.

Don’t get too excited just yet, though: for now, this won’t be available as a public release. To use it, you’ll need access through Google’s development platform Vertex AI or through AI Studio. It’s bound to reach the public as well, but we don’t know when.

All in all, witnessing the growth of AI is seriously exciting. If you’re one of the people who fear it will rule the world one day, don’t be too scared. The way I see it, it’s here to make our lives easier and give us more space to fulfill our potential as intelligent, intuitive, and creative human beings. It will just ensure we don’t have to waste precious time on the boring stuff (like listening to a long earnings call, you know).
