2023 has truly been the year in which artificial intelligence made its way into our daily lives, with companies like Google and Microsoft pushing to expand its boundaries. In line with these efforts, Google is introducing a new AI visual try-on experience. It is designed to accurately visualize apparel on various body types and help users find the right item, addressing one of the biggest problems for online shoppers.

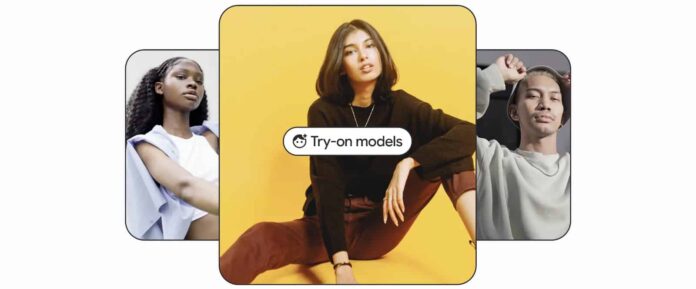

Launched as an update to Google Shopping, the visual try-on experience uses Google’s internal diffusion models to let shoppers see how garments would appear on a diverse range of real-life models. The selection of models spans a broad spectrum of skin tones, ethnicities, hair types, and body shapes from XXS to 4XL, so users can find a representation that closely aligns with their physique.

How did Google train the new model?

According to Google, the model was trained on pairs of images showing the same person wearing a garment in two distinct poses. For example, an image of someone wearing a shirt while standing sideways would be paired with another image of them facing forward. The company also used random pairs of garment and person images to make the model more robust.

Although the virtual try-on experience will initially focus on women’s tops from renowned brands like H&M, Anthropologie, Everlane, and Loft, Google has already announced plans to expand the feature to include men’s tops and other types of apparel soon.

Reasoning behind the new feature

Google says its motivation for launching the visual try-on experience stemmed from a survey which found that over 42% of online shoppers didn’t feel represented by the images of models, and over 59% were disappointed with an online purchase because the item looked different on them than they had expected.

“When you try on clothes in a store, you can immediately tell if they’re right for you. You should feel just as confident shopping for clothes online,” said Lilian Rincon, the senior director of consumer shopping products at Google.

In addition to the new visual try-on feature, Google is also debuting new filters that use machine learning to let users refine their searches by specific criteria, such as color, style, and pattern, ultimately helping them discover new products and make purchases.
