Three apps that generated $30 million in revenue last year had multiple user reviews mentioning sexual abuse

Levine and 12 other computer scientists did some digging and discovered that, of the 550 social networking apps offered by the App Store and the Google Play Store, 20% had two or more complaints in their reviews about content characterized as “child sexual abuse material.” A whopping 81 apps had seven or more such complaints. Levine says that Apple and Google need to do a better job of giving parents information about such apps and of policing their app storefronts to kick out such titles.

Hoop, one of the apps the App Danger Project says is unsafe for children, remains in the App Store and Play Store

The Justice Department, in more than a dozen criminal cases in various states, described the three apps as “tools” used by subscribers to ask children to send them sexual images or to meet with them. And some apps that are a danger to children remain in the App Store and Google Play Store, according to Hany Farid, a computer scientist who worked with Mr. Levine on the App Danger Project.

Apple investigated the App Store apps listed on the App Danger Project website and ended up removing 10 of them, although it won’t reveal their names. “Our App Review team works 24/7 to carefully review every new app and app update to ensure it meets Apple’s standards,” said a company spokesman.

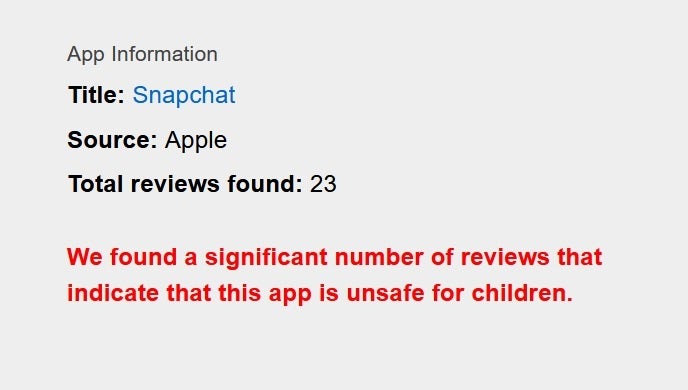

Snapchat is also on the App Danger Project list as being “unsafe for children.”

Hoop is one of the apps the App Danger Project lists as a danger to kids: 176 of the 32,000 reviews posted for it since 2019 mention sexual abuse. One such review pulled from the App Store said, “There is an abundance of sexual predators on here who spam people with links to join dating sites, as well as people named ‘Read my picture.’ It has a picture of a little child and says to go to their site for child porn.”

Hoop, now under new management, says it has a new content moderation system that makes the app safer. Liath Ariche, Hoop’s chief executive, noted that the app has learned how to deal with bots and malicious users. “The situation has drastically improved,” he says. MeetMe parent The Meet Group told the Times that it doesn’t tolerate abuse or exploitation of minors. Whisper did not respond to requests for comment.

It should be noted that WhatsApp and Snapchat are also on the App Danger Project website, both listed as “unsafe for children.” One could argue that the App Danger Project is being too sensitive, but when it comes to children, many would answer that zero tolerance is the only way to protect them.
