As the company notes, “Since the early days of Search, AI has helped us with language understanding, making results more helpful. Over the years, we’ve deepened our investment in AI and can now understand information in its many forms — from language understanding to image understanding, video understanding and even understanding the real world. Today, we’re sharing a few new ways we’re applying our advancements in AI to make exploring information even more natural and intuitive.”

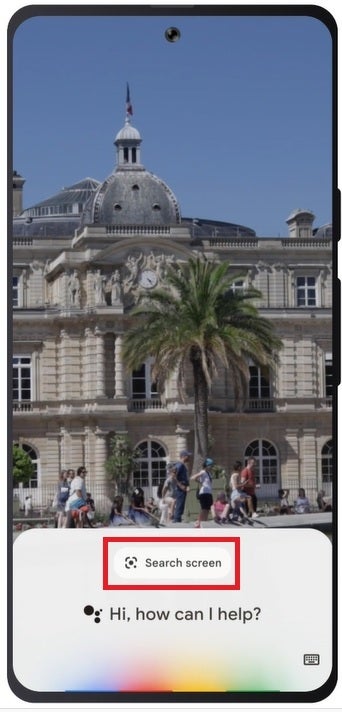

Google Lens will now “search your screen”

The first feature to cover is Google Lens, an AI-powered search tool that uses your photos or live camera previews instead of words. Want to be surprised? Lens is used more than 10 billion times each month. An update rolling out over the coming months will allow Android users to “search your screen.” After the update, you’ll be able to use Lens to search photos and videos from websites and from messaging and video apps without having to leave the app. Cool.

Google Lens will allow Android users to search photos and videos from websites and apps

Multisearch will soon be available for local searches “near me”

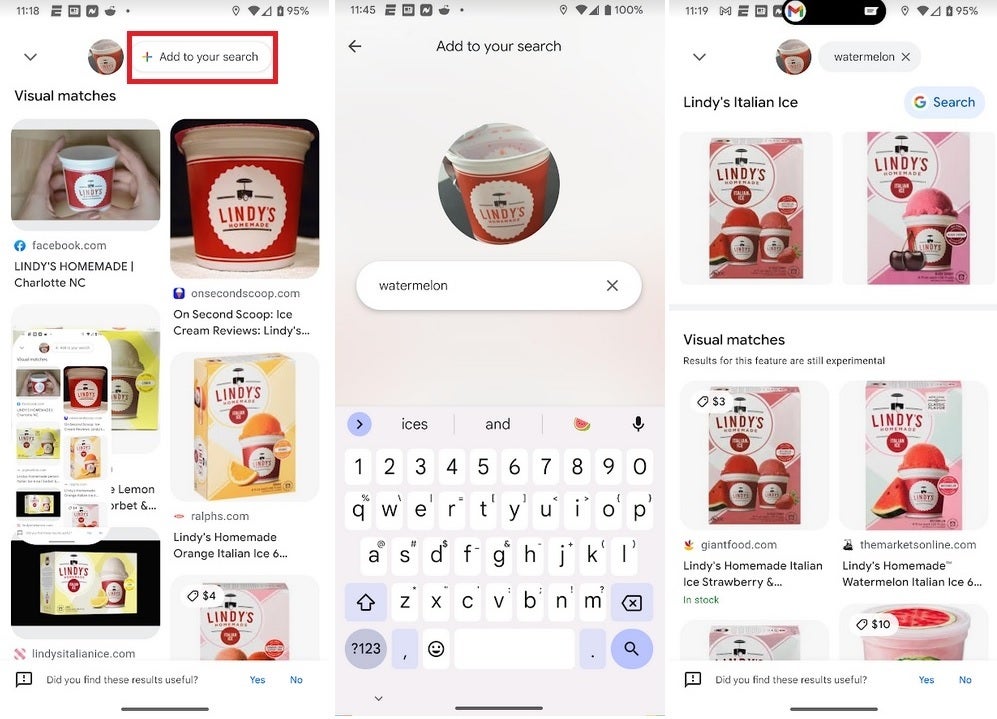

After you snap the image, the results partially appear at the bottom of the screen. Drag the tab holder up and you’ll see the results of your Lens search, with a button on top that says “+Add to your search.” Tap that button and a field will appear where you can add text. In this case, we want to find Lindy’s watermelon ice, so we type “watermelon” and tap the magnifying glass icon at the bottom right of the QWERTY keyboard (where “Enter” would normally be) to get a more targeted results page.

Multisearch on Google Lens allows you to search with images and text together

Multisearch is available globally on mobile, in all languages and countries where Google Lens is available. You can also now search locally by adding the words “near me” to a Lens search to find what you want closer to you. This local option is available right now in English for U.S. users and is being added globally in the coming months. Even more exciting, in the coming months you’ll be able to use multisearch on any image found on the mobile Google search results page.
