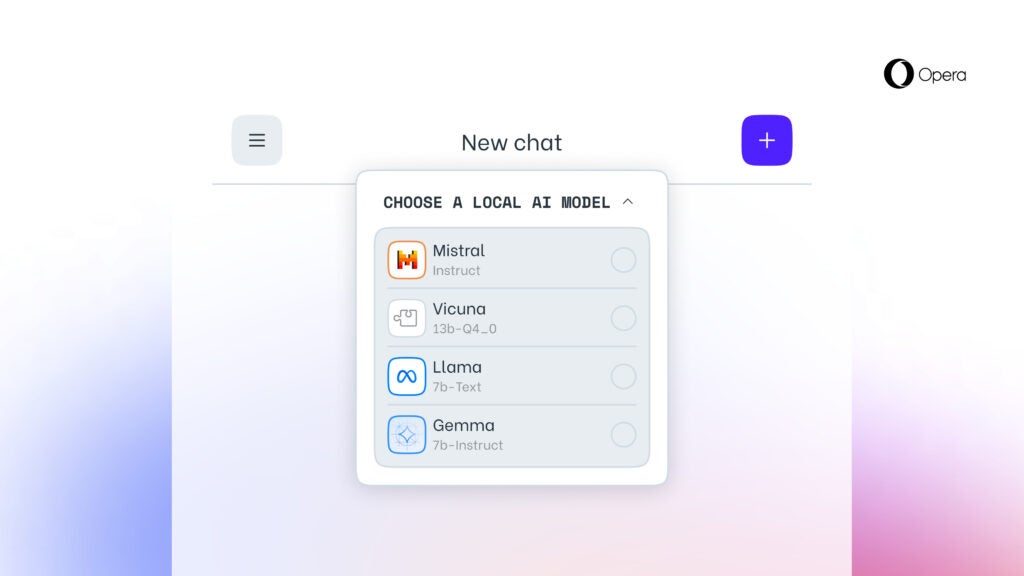

By adding experimental support for 150 local LLM (Large Language Model) variants from about 50 model families, Opera lets users access and manage local LLMs directly from its browser.

According to Opera, the local AI models are a complementary addition to Opera’s online Aria AI service, which is also available in the Opera browser on iOS and Android. The supported local LLMs include Llama (Meta), Vicuna, Gemma (Google), Mixtral (Mistral AI), and many more.

Keep in mind that once you select an LLM, it will be downloaded to your device, and a local LLM typically requires 2-10 GB of storage space per variant. Once the download is complete, the new LLM will be used instead of Aria, Opera’s native browser AI.
