
ChatGPT and similar large language models (LLMs) can write text on any given subject, at any desired length, at a speed no human can match.

So it’s not a surprise that students have been using them to “help” write assignments, much to the dismay of teachers who prefer to receive original work from actual humans.

In fact, in Malwarebytes’ recent research survey, “Everyone’s afraid of the internet and no one’s sure what to do about it,” we found that 40% of people had used ChatGPT or similar to help complete assignments, while 1 in 5 admitted to using it to cheat on a school assignment.

It’s becoming very hard to tell whether a text was written by an actual person or by a tool like ChatGPT, and this has led to students being falsely accused of using ChatGPT. At the same time, students who do use these tools shouldn’t receive grades they don’t deserve.

Potentially worse is an influx of so-called scientific articles that either add nothing new or introduce “hallucinations,” where an LLM makes up “facts” that are untrue.

Several programs designed to detect text generated by artificial intelligence (AI) have been created, and testing is ongoing, but the success rate of these tools, most of which are themselves AI-based, hasn’t been great.

Many have found the existing detection tools ineffective, especially for professional academic writing. These tools have also shown a bias against non-native speakers: seven common web-based AI detection tools all flagged work by non-native English writers as AI-generated more often than writing by native English speakers.

But now it seems that chemists have found an important building block for creating more effective detection tools. In a paper titled “Accurately detecting AI text when ChatGPT is told to write like a chemist,” they describe how they developed and tested an accurate AI text detector for scientific journals.

Using machine learning (ML), the detector examines 20 features of writing style, including variation in sentence lengths, the frequency of certain words, and the use of punctuation marks, to determine whether an academic scientist or ChatGPT wrote the examined text.
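The article does not list the 20 features, so the sketch below only illustrates the idea with a few features of the kinds it names: variation in sentence length, the frequency of certain words, and punctuation use. The function names, the marker-word list, and the naive sentence splitter are hypothetical choices for illustration, not the researchers’ feature set.

```python
import re
import statistics

# Hypothetical marker words; the detector's real word list is not given in the article.
MARKER_WORDS = ("however", "but", "although", "because", "this", "others", "researchers")

# Punctuation marks to count, keyed by a readable feature name.
PUNCTUATION = {"comma": ",", "semicolon": ";", "colon": ":", "paren": "(", "question": "?"}


def split_sentences(text: str) -> list[str]:
    """Naive sentence splitter: break after '.', '!' or '?' followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]


def style_features(text: str) -> dict[str, float]:
    """Return a small dictionary of stylometric features for one passage."""
    sentences = split_sentences(text)
    lengths = [len(s.split()) for s in sentences]  # sentence length in words
    words = re.findall(r"[a-z']+", text.lower())
    n_sent = max(len(sentences), 1)
    n_words = max(len(words), 1)

    features = {
        "mean_sentence_len": float(statistics.mean(lengths)) if lengths else 0.0,
        # Population standard deviation: 0.0 for a single sentence instead of an error.
        "sentence_len_stdev": statistics.pstdev(lengths) if lengths else 0.0,
    }
    # Punctuation use, normalized per sentence.
    for name, mark in PUNCTUATION.items():
        features[f"{name}_per_sentence"] = text.count(mark) / n_sent
    # Relative frequency of each marker word among all words.
    for word in MARKER_WORDS:
        features[f"freq_{word}"] = words.count(word) / n_words
    return features


sample = "However, the yield was low. We repeated the reaction; it improved (slightly)."
feats = style_features(sample)
print(feats["mean_sentence_len"], feats["sentence_len_stdev"])  # 6.0 1.0
```

Each passage becomes a fixed-length vector of numbers, which is the form a machine-learning classifier needs; a real detector would use more carefully chosen features and a trained model on top.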

To measure the detector’s accuracy, the scientists tested it against 200 introductions in American Chemical Society (ACS) journal style. For 100 of these, ChatGPT generated the text from the papers’ titles, and for the other 100, from their abstracts.

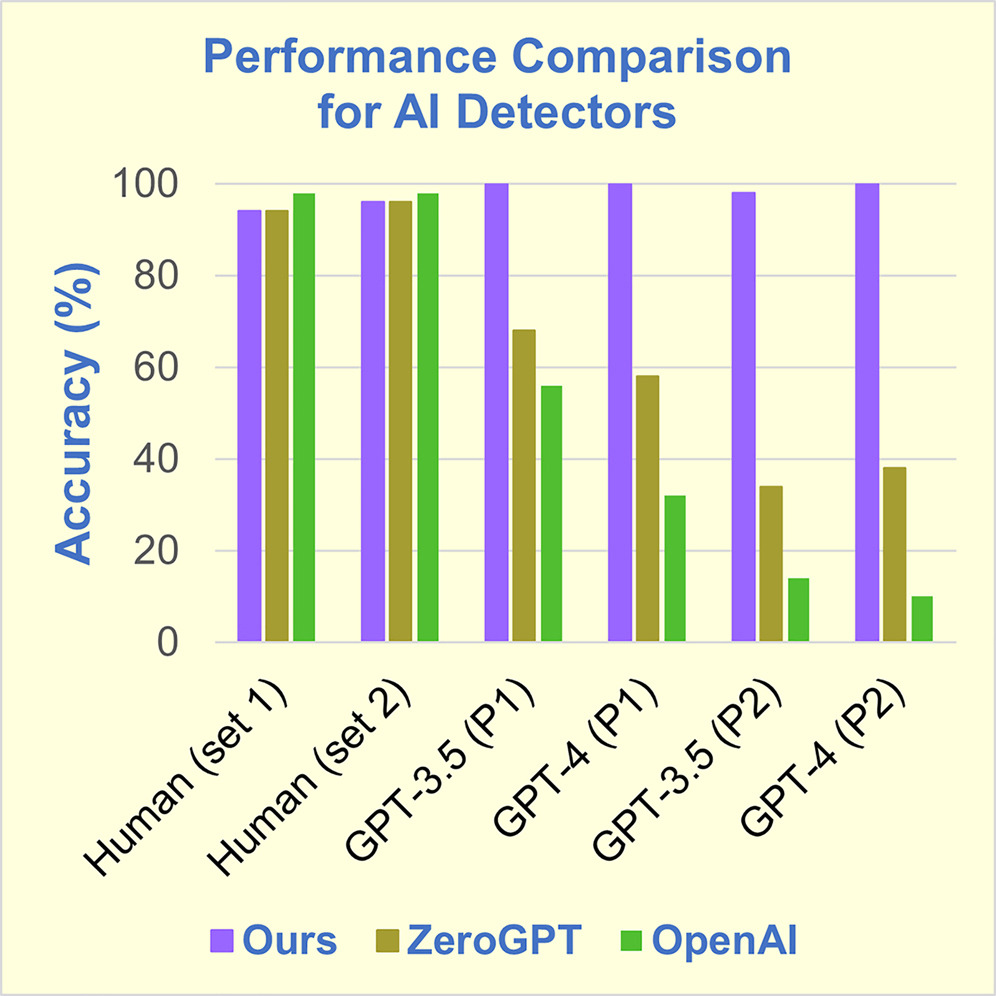

It showed astonishing results. It outperformed the online tools provided by ZeroGPT and OpenAI by identifying ChatGPT-3.5 and ChatGPT-4 written sections based on titles with 100% accuracy. For the ChatGPT-generated introductions based on abstracts, the accuracy was slightly lower, at 98%.

Image courtesy of ScienceDirect

The graph shows the accuracy of three detectors on texts written by humans (to measure false positives), by ChatGPT-3.5, and by ChatGPT-4. P1 denotes texts generated from titles, and P2 texts generated from abstracts.
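Because the human-written texts in the graph measure false positives while the ChatGPT texts measure detection, the two kinds of error are worth keeping apart. Below is a minimal sketch of that bookkeeping, assuming boolean labels where `True` means “written by AI.” The `evaluate` function and the example batch are hypothetical; the batch simply shows what a 98% result on 100 abstract-based introductions would mean (98 flagged, 2 missed).

```python
from dataclasses import dataclass


@dataclass
class EvalResult:
    accuracy: float             # share of all texts classified correctly
    false_positive_rate: float  # share of human texts wrongly flagged as AI
    false_negative_rate: float  # share of AI texts the detector missed


def evaluate(labels: list[bool], predictions: list[bool]) -> EvalResult:
    """Compare detector output with ground truth; True means 'written by AI'."""
    if not labels or len(labels) != len(predictions):
        raise ValueError("labels and predictions must be non-empty and equal length")
    pairs = list(zip(labels, predictions))
    tp = sum(1 for truth, pred in pairs if truth and pred)
    tn = sum(1 for truth, pred in pairs if not truth and not pred)
    fp = sum(1 for truth, pred in pairs if not truth and pred)
    fn = sum(1 for truth, pred in pairs if truth and not pred)
    n_human, n_ai = tn + fp, tp + fn
    return EvalResult(
        accuracy=(tp + tn) / len(pairs),
        false_positive_rate=fp / n_human if n_human else 0.0,
        false_negative_rate=fn / n_ai if n_ai else 0.0,
    )


# Hypothetical batch: 100 AI-written introductions, 98 of them flagged.
result = evaluate([True] * 100, [True] * 98 + [False] * 2)
print(result.accuracy, result.false_negative_rate)  # 0.98 0.02
```

On a set that contains only AI text, accuracy equals the detection rate; on a set of only human text, one minus accuracy is the false-positive rate. That is why the human-written column matters: a detector that flags everything would score perfectly on the ChatGPT columns and fail there.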

What’s important about this research is that it shows specialized tools can achieve a much better detection rate. This suggests that efforts to develop AI detectors could get a significant boost from tailoring software to specific types of writing.

Once we learn how to build such specialized tools quickly and easily, we can expand the number of fields covered by specialized detectors. According to one of the researchers, the findings show that “you could use a small set of features to get a high level of accuracy.”
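The point that a few well-chosen features can carry a lot of signal can be illustrated with a deliberately simple classifier. The sketch below uses a nearest-centroid classifier on two hypothetical features with made-up numbers; it is not the researchers’ model, since the article describes their method only as machine learning over 20 style features.

```python
import math
from statistics import mean, pstdev
from typing import Callable


def fit_nearest_centroid(X: list[list[float]], y: list[str]) -> Callable[[list[float]], str]:
    """Standardize each feature, store one centroid per class, return a predictor."""
    n_feat = len(X[0])
    mus = [mean([row[j] for row in X]) for j in range(n_feat)]
    # Fall back to 1.0 when a feature has zero spread, to avoid dividing by zero.
    sds = [pstdev([row[j] for row in X]) or 1.0 for j in range(n_feat)]

    def standardize(row: list[float]) -> list[float]:
        return [(v - m) / s for v, m, s in zip(row, mus, sds)]

    centroids = {}
    for label in set(y):
        rows = [standardize(r) for r, lab in zip(X, y) if lab == label]
        centroids[label] = [mean([r[j] for r in rows]) for j in range(n_feat)]

    def predict(row: list[float]) -> str:
        # Assign the class whose centroid is closest in standardized feature space.
        z = standardize(row)
        return min(centroids, key=lambda lab: math.dist(z, centroids[lab]))

    return predict


# Hypothetical training data: [sentence_len_stdev, commas_per_sentence]
X = [[8.0, 1.5], [7.0, 1.8], [9.0, 1.2], [3.0, 0.9], [2.5, 1.0], [3.5, 0.8]]
y = ["human", "human", "human", "ai", "ai", "ai"]
predict = fit_nearest_centroid(X, y)
print(predict([7.5, 1.6]), predict([3.0, 1.0]))  # human ai
```

Standardizing first keeps a feature measured on a large scale (such as sentence-length spread) from drowning out one on a small scale (such as commas per sentence). A production detector would use more features, far more training text, and a stronger model.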

To put this into perspective, the detector was built as a part-time project by a few people in approximately one month. The scientists designed it before ChatGPT-4 was released, yet it works just as effectively on GPT-4 text, which suggests that future versions are unlikely to produce text in a way that significantly changes the detector’s accuracy.

